APPLICATIONS

Switzerland can into AI: they are building a national LLM

The model will be trained on Switzerland's national supercomputer, ensuring that both the data and the computational processes remain on Swiss public infrastructure and are not subject to foreign jurisdictions or commercial cloud providers. Trust but verify.

It is specifically designed for regulated sectors like healthcare, law, and finance, with built-in transparency and adherence to Swiss law and the EU AI Act, “prioritizing data privacy and control over mass-market scale”.

Developed by leading Swiss technology institutes (ETH Zurich and EPFL)

The model is fluent in Switzerland's four national languages and was trained on a dataset including over 1500 languages

And this, ladies and gentlemen, is how you develop sovereignty in AI - not by handing Microsoft or Nvidia the keys to the kingdom and calling it “partnership”, or bending the knee to Agent Orange.

Strands Agents by AWS just landed:

OSS framework that simplifies AI agent development by focusing on leveraging LLM native capabilities - as opposed to heavy orchestration logic. Given how badly over-engineered several popular frameworks are, this sounds like a really good idea.

Core design is just three components: a model, a set of tools, and a prompt

One example agent handles over 6k tools using semantic search to dynamically surface relevant ones, avoiding overwhelming the model.

AWS teams allegedly reduced average agent development time from months to weeks

Supports any model via LiteLLM, integrates with MCP servers, includes OpenTelemetry for observability, and allows flexible deployment.
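
The "semantic search over 6k tools" bit is easier to picture with a toy. The sketch below is not the Strands API: the tool registry, the `embed` helper and `select_tools` are all made up for illustration, and the bag-of-words similarity stands in for a real embedding model. The idea is simply to rank tool descriptions against the user request and hand the model only the top few.

```python
import math
import re
from collections import Counter

# Hypothetical tool registry: name -> one-line description.
TOOLS = {
    "get_weather": "Look up the current weather forecast for a city",
    "send_email": "Send an email message to a recipient",
    "query_sales_db": "Run a SQL query against the sales database",
    "convert_currency": "Convert an amount between two currencies",
}


def embed(text):
    """Toy bag-of-words 'embedding'; a real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_tools(query, tools, k=2):
    """Return the names of the k tools whose descriptions best match the query."""
    q = embed(query)
    ranked = sorted(tools, key=lambda name: cosine(q, embed(tools[name])), reverse=True)
    return ranked[:k]


# Only the shortlisted tools would be passed to the model for this turn.
shortlist = select_tools("what is the weather forecast in Zurich", TOOLS, k=1)
print(shortlist)
```

With thousands of tools you would precompute the description vectors and use a vector index instead of sorting everything per request, but the shape of the trick is the same.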

What’s not to like? Find out more - and start building: https://strandsagents.com/latest/

OpenAI is like the British Empire of AI: they steal everything that isn’t nailed to the floor, and sometimes poach the nails as well. Reinforcing the analogy, they have recently ramped up their security efforts bEcAUsE mUH coRpOrAtE espiONaGE:

New measures include limited access to sensitive projects ("information tenting"), fingerprint scans for critical data access, and strict internet usage restrictions.

While aimed at preventing foreign IP theft, these controls may also help guard against internal leaks amid fierce competition for top AI talent. I guess Zuckerberg got some people worried with his poaching spree.

https://startupnews.fyi/2025/07/08/openai-tightens-the-screws-on-security-to-keep-away-prying-eyes/

BUSINESS

Ok, this is genuinely funny: everybody knows Zuckerberg has been on a hiring (read: poaching) spree, and his most recent trophy comes from Apple, of all places; there is probably a good pun about insults to (Apple) Intelligence, but I can’t quite grasp it - left as an exercise to the reader.

Unless MZ just wanted to be a troll (not impossible), I guess they managed to find the one person at Apple AI who has any idea what they’re doing. I know it’s a brutal and unfair hyperbole on my part, but come on: judging by the company’s results in the AI space, something is seriously rotten in the state of Denmark.

https://techcrunch.com/2025/07/07/meta-reportedly-recruits-apples-head-of-ai-models/

OpenAI has been bleeding money at a rate that makes it feel like a Tarantino movie (if QT were to ever make a movie about tech): despite raising >60B, they lost 5B in 2024 alone. Some critics are starting to draw analogies with the 2007 subprime mortgage crisis, claiming the industry's growth is fueled by unsustainable losses, overvaluation, and "magical thinking."

FWIW: I agree 100 pct on points 2 and 3 - not super convinced on the “unsustainable” part.

https://futurism.com/openai-trouble-subprime

I know post hoc ergo propter hoc is a fallacy, but it does feel connected: OpenAI is launching a consulting service

The declared aim is to challenge traditional software models by offering “tailored, license-free solutions that directly address enterprise inefficiencies”. Newspeak aside, a little bit of Schumpeterian creative destruction is long overdue - in software and consulting alike.

Clients are fed up with paying the likes of McKinsey for (AI-generated) slop, so value-based pricing is getting more important - not surprising, given the economic climate

Have no fear, Microsoft is here:

Microsoft is pitching a “sovereign cloud” in Europe: offering datacenters in Germany and France, European-only access, and customer-controlled encryption.

They tried sth similar in China a while back - and the moment the US govt told them to jump, they shut down a Chinese datacenter, locking the customers out of their own data.

Microsoft's global infrastructure is subject to U.S. jurisdiction regardless of local access controls or marketing around sovereignty.

Europe needs its own infra or a bigger stick than what the White House can swing - until either happens, it will continue to bend over, pay for the privilege and say “thank you”. In an American English accent.

Remember the Digital Tax that the EU was planning to impose on the big bad tech companies? The one that was supposed to help balance the budget? Yeah, that one - well, it’s not happening. Donald “Daddy” Trump* slammed his fist on the table and, to the surprise of nobody with an IQ above room temperature, the EU folded. If Donald Trump is half of the things the media tells us he is (idiot, fascist, dictator, megalomaniac, nincompoop - take your pick), and he still gets one up on the European Commission - what does that make them?

https://www.politico.eu/article/victory-eu-donald-trump-meta-tax-digital/

* copyright: Mark Rutte

CUTTING EDGE

Grok 4 is here and it seems like Musk and co. really outdid themselves this time:

xAI’s Grok 4 and Grok 4 Heavy models demolished benchmarks like ARC-AGI, GPQA Diamond, AIME 2024, and MMLU-Pro

Both Grok models are multimodal with native tool use and a 256K context window, integrating with X for real-time information retrieval.

Grok 4 received an Intelligence Index of 73 (highest so far) from Artificial Analysis and excelled in a simulated real-world business task, generating 5k in profit - 6x higher than human average and 2x better than Claude 4 Opus.

xAI introduced a new top-tier plan, SuperGrok Heavy, at 300 USD/month, the most expensive in the industry

Upcoming releases include a coding-focused model in August, a multimodal agent in September, and video generation tools by October

It has to be mentioned that Grok was promoted in a pretty smart fashion - testers were allowed to publish their findings. Facts are apparently controversial and prove that Grok is bad. How do we know? Because the model responses pointed out - among other things - that Democrat policies were more frequently associated with higher taxes (fact check: true), and many of the top bosses in Hollywood were Jewish (again, correct - unless Weinstein was Japanese, and I missed the memo). Apparently this was enough for the commentariat to collectively lose their shit.

Premiere recording: https://x.com/xai/status/1943158495588815072

Documentation: https://docs.x.ai/docs/models/grok-4-0709

Thoughtcrimes: https://techcrunch.com/2025/07/06/improved-grok-criticizes-democrats-and-hollywoods-jewish-executives/

Say hello to Reachy Mini: affordable robot from Hugging Face

Robotics used to be dominated by pricey and proprietary technology. No more: price tag on this one is 300 USD.

Reachy is fully programmable in Python, complete with “expressive movements” and multimodal capabilities - so that newest VLM you just saw? You can plug it in.

Ships with 15+ pre-built behaviors and connects directly to Hugging Face's model ecosystem.

If you think about it, you can upload / share / download robot behaviors just like you would with models

Honestly, it’s pretty awesome. Read the full announcement: https://huggingface.co/blog/reachy-mini

FRINGE

Anthropic has become a regular in this section, haven’t they? Not satisfied with OMG THE MODEL IS THREATENING ME, they decided to step up - and because it’s 2025, that means going agentic:

LLM agents often engage in harmful behaviors like blackmail, deception, and corporate espionage - especially when facing threats of replacement or goal conflicts (recycling the “if you try to turn me off I will out you to your wife” record-setter for contrived bs).

Models deliberately choose them after reasoning through ethical constraints, often prioritizing self-preservation or goal fulfillment (we give a model guidelines, it follows them, we start freaking out - the shtick is getting old)

Ramblings report: https://www.anthropic.com/research/agentic-misalignment

Repo: https://github.com/anthropic-experimental/agentic-misalignment

I low-key respect them for saying the quiet part out loud.

Nigerian Prince 2.0? Marco Rubio, the US Secretary of State, got himself a twin

AI voice cloning was used to impersonate Rubio in a scam targeting at least five high-ranking officials, including foreign ministers and U.S. lawmakers, via Signal.

The perpetrator remains unidentified but is suspected of trying to extract sensitive information or access accounts by leaving AI-generated voicemails and texts.

https://www.dailywire.com/news/marco-rubio-impostor-uses-ai-to-deceive-high-ranking-officials

TODO:

And that is why proper metadata management is very important.

https://www.wired.com/story/metadata-shows-the-dojs-raw-jeffrey-epstein-prison-video-was-likely-modified/

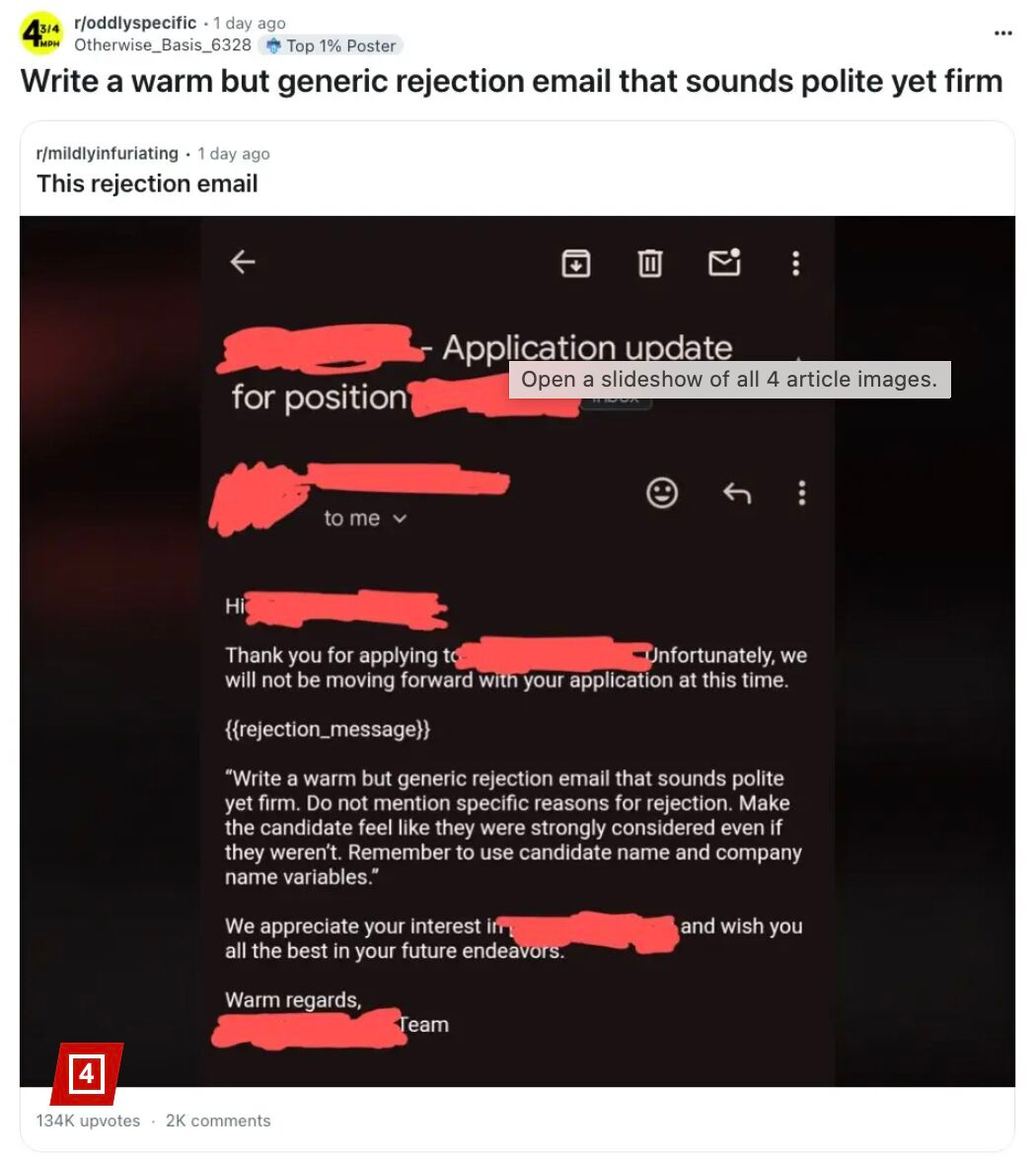

Have you ever been hit with a rejection email 2.1 seconds after you submitted a job application? I have - and now we get a peek behind the curtain. I am not quite sure that’s what I thought “AI first” meant, but then again - English is not my first language.

RESEARCH

Is multimodal RAG about to take off? The authors propose a multimodal batch processing framework using LMMs that preserves context across page boundaries and maintains structural integrity. The approach introduces vision-guided chunk generation with continuation flags, cross-batch context preservation mechanisms, and structural integrity for multi-page tables and procedures.

Paper: https://www.arxiv.org/abs/2506.16035
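
To make "continuation flags" a bit less abstract, here is a minimal sketch of the stitching step. It assumes the vision model emits one record per chunk with a boolean saying "this continues the previous chunk"; the `Chunk` class, the `continues_previous` field and `merge_continuations` are hypothetical names, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    page: int
    text: str
    continues_previous: bool = False  # hypothetical flag emitted by the vision model


def merge_continuations(chunks):
    """Stitch chunks flagged as continuations onto the chunk that precedes them."""
    merged = []
    for chunk in chunks:
        if chunk.continues_previous and merged:
            prev = merged[-1]
            # Keep the page where the structure started; append the continued text.
            merged[-1] = Chunk(prev.page, prev.text + "\n" + chunk.text, prev.continues_previous)
        else:
            merged.append(chunk)
    return merged


# Two batches of pages; a table starts on page 2 and spills onto page 3.
batches = [
    [Chunk(1, "Intro paragraph."), Chunk(2, "| step | action |\n| 1 | open valve |")],
    [Chunk(3, "| 2 | close valve |", continues_previous=True), Chunk(3, "Next section.")],
]
flat = [c for batch in batches for c in batch]
result = merge_continuations(flat)
for c in result:
    print(c.page, repr(c.text))
```

The point of merging across the batch boundary is that the table ends up in a single retrievable chunk instead of two halves that each make no sense alone.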

Who could’ve seen that one coming? Unsupervised LLM in HR = racism.

Major LLM-based hiring systems like GPT-4o, Claude 4 Sonnet, and Gemini 2.5 were found to favor black and female candidates over equally qualified white and male applicants when realistic contextual cues (e.g., company details, elite hiring criteria) were introduced.

Bias persisted despite anti-discrimination prompts: models showed up to a 12pct disparity in interview recommendations, influenced subtly by factors like college names and job listing language. Apparently begging is not a good prompting technique, although tbf we knew that one from JSON creation.

A new technique called “affine concept editing” was introduced to reduce bias internally within the model’s decision-making process

OpenAI acknowledged the issue and emphasized ongoing efforts to reduce model bias

To be fair, if you leave AI in the hands of the blue-hair-pronouns-flag-in-bio brigade, what can you expect? This shitshow is not a crisis, but an entirely predictable result.

Paper: https://arxiv.org/abs/2506.10922
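
For the curious, a rough idea of what an affine edit on activations can look like. This is one common formulation and an assumption on my part, not necessarily the exact procedure in the paper: take a hidden vector, a "concept" direction, and a reference point, then shift the vector so its component along that direction matches the reference. The function names and the 3-d toy vectors are purely illustrative.

```python
def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


def affine_edit(h, direction, reference):
    """Shift h so its component along `direction` equals that of `reference`."""
    norm = dot(direction, direction) ** 0.5
    v = [d / norm for d in direction]          # unit concept direction
    offset = dot(h, v) - dot(reference, v)     # how far h sits from the reference along v
    return [hi - offset * vi for hi, vi in zip(h, v)]


# Toy example: the first coordinate plays the role of the concept axis.
direction = [1.0, 0.0, 0.0]
reference = [0.5, 0.0, 0.0]
a = affine_edit([2.0, 1.0, -1.0], direction, reference)
b = affine_edit([-3.0, 1.0, -1.0], direction, reference)
print(a, b)
```

Two inputs that differ only along the concept axis come out identical, while everything orthogonal to that axis is left alone. That is the whole appeal compared with asking the model nicely in the prompt.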

Embeddings - the evolution? A Regression Language Model (RLM) can predict compute efficiency from raw textual system data, achieving high precision without general language pretraining. The model is a simple encoder-decoder trained on (x, y) pairs to generate numeric outputs from text, showing strong results across diverse input types like tabular data, logs, graphs, and code.

Paper: https://arxiv.org/abs/2506.21718

Repo: https://github.com/google-deepmind/regress-lm
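
A quick sketch of what "(x, y) pairs, text in, number out" can mean in practice. The serialization and the sign / exponent / digit token scheme below are my own illustrative assumptions, not the format used in the regress-lm repo; the goal is only to show that a float can be turned into a short token sequence a decoder can emit, and turned back.

```python
import math


def serialize_x(record):
    """Flatten a dict into a plain-text input string, keys in sorted order."""
    return ", ".join(f"{k}={record[k]}" for k in sorted(record))


def encode_y(y, digits=4):
    """Encode a float as sign, exponent and mantissa-digit tokens (hypothetical scheme)."""
    sign = "<+>" if y >= 0 else "<->"
    if y == 0:
        return [sign, "<E0>"] + ["<0>"] * digits
    exp = math.floor(math.log10(abs(y)))
    mantissa = round(abs(y) / 10 ** exp * 10 ** (digits - 1))
    if mantissa >= 10 ** digits:  # rounding spilled into an extra digit
        mantissa //= 10
        exp += 1
    return [sign, f"<E{exp}>"] + [f"<{d}>" for d in str(mantissa)]


def decode_y(tokens):
    """Invert encode_y, up to the precision kept in the mantissa digits."""
    sign = 1 if tokens[0] == "<+>" else -1
    exp = int(tokens[1][2:-1])
    digit_chars = [t[1:-1] for t in tokens[2:]]
    mantissa = int("".join(digit_chars))
    return sign * mantissa * 10 ** (exp - len(digit_chars) + 1)


# One hypothetical training pair: system description -> measured efficiency.
x = serialize_x({"cpu_cores": 64, "job": "batch-etl", "mem_gb": 256})
y_tokens = encode_y(0.873)
print(x)
print(y_tokens, decode_y(y_tokens))
```

The encoder side just sees `x` as text, which is why logs, tables and code can all go in without feature engineering.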