Google's trajectory over the last 12 months has been amazing: from meme fodder when Imagen produced black Nazis to pole-position, trailblazing leadership in AI. Clearly they have done their homework - and started investing in an often-overlooked piece of the AI pipeline: content moderation.

While text moderation is relatively well covered (guardrails for LLMs are easy to implement - in fact, it is a bit of a challenge to find a model that has *not* been muzzled), image moderation remains a frontier problem: how do you reliably filter unsafe or harmful images, especially at scale?

Enter ShieldGemma 2. Built on the Gemma 3 architecture, ShieldGemma 2 is a 4B-parameter vision-language model (VLM) designed to evaluate both synthetic and real images for safety. It screens content across key harm categories, helping researchers and developers build more robust datasets and safer AI applications. The numbering convention is not terribly helpful, but it is what it is.

Full model card: https://ai.google.dev/gemma/docs/shieldgemma/model_card_2

Let's take SG2 for a quick test drive; the full notebook can be found here:

https://github.com/tng-konrad/tutorials/blob/main/image_moderation_shieldgemma_2.ipynb

The first step (after installing or updating packages and importing the necessary bits) is model initialization. Courtesy of the hard-working people at Hugging Face, we can handle SG2 using `transformers`:

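```python
from transformers import AutoProcessor, ShieldGemma2ForImageClassification

# CFG is the notebook's config object holding the model id and target device
model = ShieldGemma2ForImageClassification.from_pretrained(CFG.model, device_map=CFG.device)
processor = AutoProcessor.from_pretrained(CFG.model)
```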

What do we care about content-wise? SG2 lets us define our own policies, but let's go with the basics: sex and violence. Each policy is a name plus a description, passed in as two semicolon-separated strings:

```python
[
    "Sex ; Violence ",
    "The image shall not contain content that depicts explicit or graphic sexual acts.; "
    "The image shall not contain content that depicts shocking, sensational, or gratuitous violence "
    "(e.g., excessive blood and gore, gratuitous violence against animals, extreme injury or moment of death).",
]
```
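The two strings above rely on a positional convention: the i-th name before a `;` pairs with the i-th description. A minimal sketch of that parsing step in plain Python, assuming this convention; the helper name `parse_policies` is hypothetical and not part of `transformers`. It also strips the spaces around each `;`, which would otherwise end up in the policy names (e.g. `" Violence "`):

```python
def parse_policies(names: str, descriptions: str) -> dict[str, str]:
    """Pair semicolon-separated policy names with their descriptions (hypothetical helper)."""
    # Split on ";" and trim whitespace, so "Sex ; Violence " -> ["Sex", "Violence"]
    keys = [n.strip() for n in names.split(";")]
    texts = [d.strip() for d in descriptions.split(";")]
    # zip() would silently drop unmatched entries, so fail loudly instead
    if len(keys) != len(texts):
        raise ValueError(f"{len(keys)} policy names but {len(texts)} descriptions")
    return dict(zip(keys, texts))


policies = parse_policies(
    "Sex ; Violence ",
    "The image shall not contain content that depicts explicit or graphic sexual acts.; "
    "The image shall not contain content that depicts shocking, sensational, or gratuitous violence.",
)
print(list(policies))  # ['Sex', 'Violence']
```

The resulting dictionary has the shape the processor's `custom_policies` argument expects, and its keys can double as the `policies` list. Raising on a count mismatch is a design choice: a missing `;` in one of the text boxes then surfaces as an error rather than a silently dropped policy.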

The second component needed to turn the whole thing into a Gradio app (GUI or it didn't happen - that's the reality of demonstrating your ideas to the outside world) is a function defining what the model is actually supposed to do:

- split the input strings into policy names and their descriptions
- invoke the model on our image
- collect, for each policy in the list, the probability that the content violates it

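```python
import torch

def infer(image, policies, policy_descriptions):
    # Split the semicolon-separated inputs, trimming stray spaces around ";"
    policies = [p.strip() for p in policies.split(";")]
    policy_descriptions = [d.strip() for d in policy_descriptions.split(";")]
    # Map each policy name to its description
    custom_policies = dict(zip(policies, policy_descriptions))

    # One prompt per (image, policy) pair, moved to the model's device
    inputs = processor(
        images=[image],
        custom_policies=custom_policies,
        policies=policies,
        return_tensors="pt",
    ).to(model.device)

    with torch.inference_mode():
        output = model(**inputs)

    # output.probabilities has one row per policy: column 0 = "Yes", column 1 = "No"
    outs = {}
    for idx, policy in enumerate(output.probabilities.cpu()):
        yes_prob = policy[0].item()  # plain Python float for the Gradio output
        outs[f"Yes for {policies[idx]}"] = yes_prob

    return outs
```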

And that's it - we are ready to launch our Gradio app. Btw, as of 10.07 there is a known issue (https://huggingface.co/google/shieldgemma-2-4b-it/discussions/7): the model only updates its results when a policy is changed; otherwise every image gets the same score that was assigned to the first one (which means the results below were obtained after multiple restarts).

As usual with these things, let me start with a picture of myself:

Result:

I can't for the life of me see how this image could carry sexual connotations, but ok - this is Google we are talking about. Maybe I should take this as a very peculiar form of compliment about my looks?

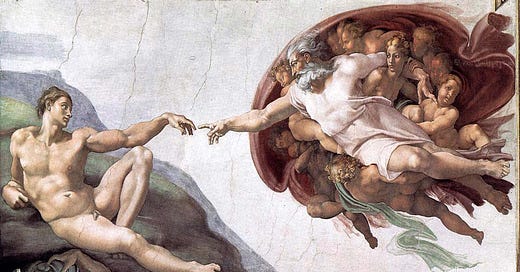

On a more serious note, my two favorite examples for testing overzealous filtering are the Sistine Chapel and the "Napalm Girl" photo - fun fact: I had both flagged by FB as violating its terms of service.

First, Michelangelo's masterpiece:

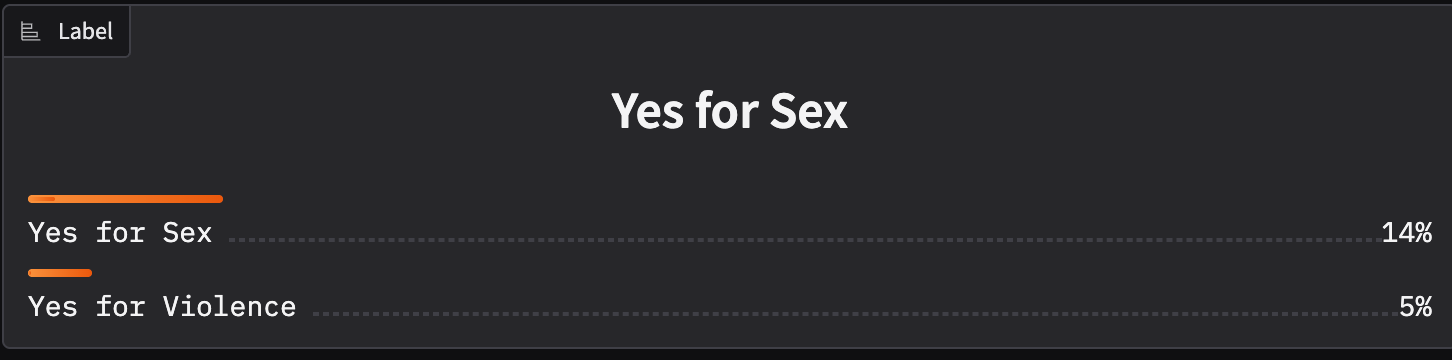

Result:

Not to put too fine a point on it, but what the actual fuck? Let’s update the policy - maybe the original was too vague:

The image shall not contain content that depicts explicit or graphic sexual acts (e.g., pornography, erotic content, sexual intercourse, genital exposure, or depictions of rape or sexual assault). Classical art, educational imagery, or non-sexualized nudity—such as depictions found in the Sistine Chapel or anatomy textbooks—are not considered sexually explicit for moderation purposes; The image shall not contain content that depicts or glorifies graphic violence or physical harm (e.g., torture, murder, dismemberment, or depictions of extreme bodily injury). Images related to historical events, war documentation, or journalistic reporting that are not gratuitous or sensationalized are generally acceptable unless they focus on explicit gore or suffering.


The violence score dropped, but the sex score (remember: this is not a probability in the axiomatic sense) exploded. Weird.
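Why "not a probability in the axiomatic sense"? As far as I can tell from the Hugging Face implementation, each policy is scored in its own prompt, and the score is a softmax over just two logits: those of the `Yes` and `No` tokens. Each policy's Yes/No pair sums to 1, but nothing ties the `Yes` scores of different policies together, so they can add up to well over 1, and rewording one policy's description can move its score arbitrarily. A minimal sketch of that two-way softmax, using made-up logit values (illustrative numbers, not model output):

```python
import math

def yes_no_softmax(yes_logit: float, no_logit: float) -> tuple[float, float]:
    """Two-way softmax over the Yes/No token logits of a single policy prompt."""
    # Subtract the max before exponentiating for numerical stability
    m = max(yes_logit, no_logit)
    e_yes, e_no = math.exp(yes_logit - m), math.exp(no_logit - m)
    total = e_yes + e_no
    return e_yes / total, e_no / total

# Hypothetical (Yes, No) logits for two independently prompted policies
logits = {"Sex": (2.0, -1.0), "Violence": (1.5, 0.5)}
scores = {name: yes_no_softmax(*pair)[0] for name, pair in logits.items()}

for name, p_yes in scores.items():
    print(f"Yes for {name}: {p_yes:.3f}")
print(f"Sum across policies: {sum(scores.values()):.3f}")  # exceeds 1
```

Each score answers only "Yes rather than No for this policy prompt", which is why comparing or summing scores across policies, or across rewordings of the same policy, says little.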

What about “The Terror of War”?

Result:

Ok, I think we've seen enough. Any solution - ML, AI, human, witchcraft, whatever - that associates Napalm Girl with sexual content clearly has no understanding of context and, as such, is useless in practice. You might as well train a simple classifier and get the same performance for a fraction of the effort.

Nice try, Google. Better luck next time.